As you already know, the new DBpedia strategy has changed the way we publish releases. The new DBpedia release process follows a three-step approach: it starts with the Extraction, continues with the ID-Management and ends with the Fusion, which finalizes the release. Our DBpedia releases are currently published on a monthly basis. In this post, we give you insight into the individual steps of the release process and into what our developers actually do when preparing a DBpedia release.

Extraction – Step one of the Release

The good news is that our new release mode is taking shape and has noticeably picked up speed. Finally, the 2018-08 release and, additionally, the 2018.09.12 and 2018.10.16 releases are now available in our LTS repository.

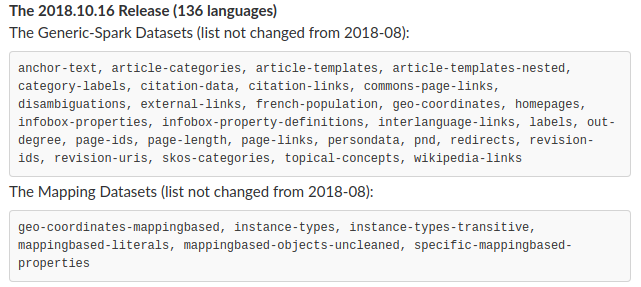

The 2018-08 release was generated on the basis of the Wikipedia datasets extracted in early August and currently comprises 136 languages. The extraction release contains the raw extracted data generated by the DBpedia extraction-framework. Post-processing steps, such as data deduplication or URI normalization, are omitted here and moved to later parts of the release process. Thus, we can provide direct, transparent access to the generated data in every step. Until we manage two releases per month, our data is mostly based on the second Wikipedia datasets of the previous month. In line with that, the 2018.09.12 release is based on late August data and the recent 2018.10.16 release is based on Wikipedia datasets extracted on September 20th. They all comprise 136 languages, and the list of datasets they contain has been stable since the 2018-08 release.

Our releases are now ready for parsing and external use. Additionally, there will be a new Wikidata-based release this week.

ID-Management – Step two of the Release

For a complete “new DBpedia” release, the DBpedia ID-Management and the Fusion of the data have to be added to the process. The Databus ID Management is a process that unifies the different IRIs coined by different data providers for the same entities. Given datasets with overlapping domains of interest from multiple data providers, the set of IRIs denoting the entities in the source datasets is determined heuristically (e.g. excluding RDF/OWL types/classes).

Afterwards, each of these selected IRIs is assigned a numeric primary key, the ‘Singleton ID’. The core of the ID Management process happens in the next step: based on the large set of high-confidence owl:sameAs assertions in the input data, the connected components of the corresponding sameAs-graph are computed. In other words, we determine the groups of all entities from the input datasets that are (transitively) reachable from one another. We dubbed these groups the sameAs-clusters. For each sameAs-cluster we pick one member as its representative, which determines the ‘Cluster ID’ or ‘Global Identifier’ for all cluster members.
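To make the clustering step more tangible, here is a minimal single-machine sketch in Python. It is not the Spark workflow we run in production. The function name `same_as_clusters`, the way Singleton IDs are numbered (position in sorted order) and the rule for picking the representative (smallest Singleton ID in the cluster) are assumptions made purely for illustration.

```python
def same_as_clusters(iris, same_as_pairs):
    """Map each IRI to (singleton_id, cluster_id).

    Illustrative only: IDs are assigned by sorted position and the
    cluster representative is the member with the smallest ID.
    """
    # Step 1: give every selected IRI a numeric 'Singleton ID'.
    singleton_id = {iri: i for i, iri in enumerate(sorted(set(iris)))}

    # Step 2: union-find over the sameAs graph.
    parent = {sid: sid for sid in singleton_id.values()}

    def find(x):
        # Follow parent links to the root, halving the path on the way.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra == rb:
            return
        # Keep the smaller ID as root, so the root is always the minimum.
        if ra < rb:
            parent[rb] = ra
        else:
            parent[ra] = rb

    for a, b in same_as_pairs:
        # Ignore links that mention IRIs outside the selected set.
        if a in singleton_id and b in singleton_id:
            union(singleton_id[a], singleton_id[b])

    # Step 3: the root of each component serves as the 'Cluster ID'.
    return {iri: (sid, find(sid)) for iri, sid in singleton_id.items()}


# Example input (IRIs chosen for illustration).
iris = [
    "http://dbpedia.org/resource/Berlin",
    "http://de.dbpedia.org/resource/Berlin",
    "http://www.wikidata.org/entity/Q64",
    "http://dbpedia.org/resource/Paris",
]
links = [
    ("http://dbpedia.org/resource/Berlin", "http://www.wikidata.org/entity/Q64"),
    ("http://de.dbpedia.org/resource/Berlin", "http://www.wikidata.org/entity/Q64"),
]
ids = same_as_clusters(iris, links)
```

In this example the two Berlin IRIs and the Wikidata IRI end up in one sameAs-cluster because both language versions link to the same Wikidata entity, even though they are not linked to each other directly. The Paris IRI has no links and forms a cluster of its own.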

Apart from being an essential preparatory step for the Fusion, these Global Identifiers serve a purpose in their own right as unified Linked Data identifiers for groups of Linked Data entities that should be viewed as equivalent or ‘the same thing’.

A processing workflow based on Apache Spark that performs the process described above for large quantities of RDF input data is already in place and has been run successfully for a large set of DBpedia inputs consisting of:

- the results of the aforementioned 2018-08 release

- mapping-based extractions from 2016-10

- the sameAs-linksets from the DBpedia Wikidata dataset (http://downloads.dbpedia.org/repo/lts/wikidata/) as ‘glue’ for additional linkage between the different language versions of DBpedia

Fusion – Step three of the Release

Based on the extraction and the ID-Management, the Data Fusion is the last step of the DBpedia release cycle. With the goal of improving data quality and data coverage, the process uses the DBpedia global IRI clusters to fuse and enrich the source datasets. The fused data contains all resources of the input datasets. The fusion process uses a functional property decision to determine the number of selected values (owl:FunctionalProperty determination). Further, the value selection for these functional properties is based on a preference that depends on the source dataset a value originates from. For example, values from EN-DBpedia are preferred over values from DE-DBpedia.
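The value selection can be sketched roughly as follows. This is a simplified illustration in Python, not our actual fusion code: the function name `fuse_cluster`, the tuple layout, the `ex:` property names and the choice to keep all distinct values for non-functional properties are assumptions made for this example.

```python
from collections import defaultdict


def fuse_cluster(statements, functional_properties, source_preference):
    """Fuse the statements of one sameAs-cluster.

    statements: iterable of (source, property, value) tuples.
    functional_properties: properties that may keep only one value.
    source_preference: sources ordered from most to least preferred.
    Returns {property: [(value, source), ...]}, so every kept value
    carries the source it came from.
    """
    rank = {source: i for i, source in enumerate(source_preference)}
    unknown = len(rank)  # sources not listed are ranked last

    by_property = defaultdict(list)
    for source, prop, value in statements:
        by_property[prop].append((source, value))

    fused = {}
    for prop, candidates in by_property.items():
        if prop in functional_properties:
            # Functional property: keep the value of the preferred source.
            source, value = min(
                candidates, key=lambda c: rank.get(c[0], unknown)
            )
            fused[prop] = [(value, source)]
        else:
            # Otherwise keep each distinct value once (first source seen).
            first_source = {}
            for source, value in candidates:
                first_source.setdefault(value, source)
            fused[prop] = list(first_source.items())
    return fused


# Hypothetical statements for a single cluster.
statements = [
    ("de", "ex:population", "101"),
    ("en", "ex:population", "100"),
    ("en", "ex:label", "Berlin"),
    ("de", "ex:label", "Berlin"),
    ("de", "ex:alias", "B"),
]
fused = fuse_cluster(
    statements,
    functional_properties={"ex:population"},
    source_preference=["en", "de"],
)
```

Here the functional property `ex:population` keeps only the value from the preferred `en` source, while `ex:alias`, which only the `de` source provides, is carried over and thereby enriches the fused resource.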

The enrichment improves the coverage of entity properties and values for resources only contained in the source data. Furthermore, we create provenance data to keep track of the origin of each triple. This provenance data is also used for the HTTP-based resource view at http://global.dbpedia.org.

At the moment, the fused and enriched data is available for the generic and mapping-based extractions. More datasets are still in progress. The DBpedia-fusion data is being uploaded to http://downloads.dbpedia.org/repo/dev/fusion/

Please note that we are still in the midst of beta testing our data release tool. If you come across any errors, please report them to us; this helps fuel the testing process.

Further information regarding the release progress can be found here: http://dev.dbpedia.org/

Next steps

We will add more releases to the repository on a monthly basis, aiming for a bi-weekly release mode as soon as possible. Between releases, any mistakes or errors you find and report in this data can be fixed for the upcoming release. Currently, the generated metadata in the DataID file is not stable: it fluctuates, still needs to be improved and will change in the near future. We are now working on the next release and will inform you as soon as it is published.

Yours,
DBpedia Association

This blog post was written with the help of our DBpedia developers Robert Bielinski, Markus Ackermann and Marvin Hofer, who were responsible for the work done on the DBpedia releases. We would like to thank them for their great work.
